CPGDet-129: Neurolabs’ Retail-First Detection Dataset

We’re excited to open source CPGDet-129, one of Neurolabs’ synthetic datasets, which is used in our retail-focused flagship technology. Neurolabs is leading the way for Synthetic Computer Vision applied across the value chain of consumer packaged goods (CPG). CPGDet-129 has been used to train a synthetic computer vision model for the task of object localisation for one of our retail partners, Auchan Romania.

Motivation

Within the wider field of computer vision, some of the main reasons for using synthetic data are speed of generation and quality of annotations. Synthetic data also adapts naturally to changes in domain, which matters because one of the hardest things in production computer vision systems is maintaining a certain level of accuracy and ensuring the models are robust against data drift. In retail, the well-known real dataset SKU-110K is used as a standard benchmark for object detection. However, it doesn’t contain instance segmentation annotations, most likely because they would be too costly to acquire. Standard Cognition recently released StandardSim, a synthetic dataset for retail aimed at the task of change detection for autonomous stores.

CPGDet-129 is the first public synthetic retail dataset of its kind, constructed specifically for the object detection task and the challenges that arise from training such computer vision models.

Engine

Neurolabs’ internal data generation engine allows programmatic control over the parameters of a 3D scene. In this white-paper, we will discuss some of the most important features of generating synthetic data for the task of object localisation, as well as the importance of consistency between real and synthetic data annotations.

On this occasion, we’ve released all products as one object class.

Dataset Specifications

Using our synthetic data generator and one scene developed in Unity, we’ve created 1200 images of products on shelves, together with their 2D bounding boxes and segmentation masks. The images vary both structurally, i.e. in how the products are placed, and in visual appearance. In total, CPGDet-129 contains 129 unique Stock Keeping Units (SKUs) and ~17,000 product annotations. We hope to engage with the wider community of synthetic data enthusiasts and practitioners through this release and encourage discussions on improving the use of synthetic data for model training, as well as to further advance Synthetic Computer Vision.

Structural Variation

One of the ways to achieve a high degree of variation is by manipulating the number of objects in a scene, as well as their rotation and relative positioning. In our case, the three main structural components are:

- instancing vs. no-instancing and stacking

- dropout

- rotations & translations

In a typical supermarket, most products are grouped by category and brand, and can be found in multiple positions. Randomising the rotation and scale of products, as well as translating them to different parts of the shelves, sits at the core of our generation engine.

In addition, we define instancing, whereby we group products together based on their class or category association. This is the first step towards structural realism.

The next step is achieved by stacking products depth-wise horizontally (XY stacking) or vertically (XZ stacking), as well as combining the two, as seen in the images above. This allows us to create dense scenes and natural occlusions, further increasing variation.

In the case of no-instancing, products are randomly placed on a shelf [Fig. 2].

Although unlikely to happen in a real setting, this introduces a different kind of variation and can be seen as a form of domain randomisation.
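The difference between instancing and no-instancing can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the generation engine itself: the function `arrange_shelf`, the `(sku, category)` tuples and the example product names are all hypothetical.

```python
import random


def arrange_shelf(products, instancing, rng):
    """Return the order in which products fill consecutive shelf slots.

    products: list of (sku, category) tuples (hypothetical format).
    """
    items = list(products)
    rng.shuffle(items)  # a random order on its own is the no-instancing case
    if not instancing:
        return items
    # Instancing: keep products of the same category in adjacent slots,
    # while still randomising the order of the categories themselves.
    categories = sorted({category for _, category in items})
    rng.shuffle(categories)
    return [item for category in categories for item in items if item[1] == category]


# Made-up products, for illustration only.
products = [
    ("cola-330ml", "drinks"),
    ("crisps-salt", "snacks"),
    ("water-500ml", "drinks"),
    ("crisps-paprika", "snacks"),
]
grouped = arrange_shelf(products, instancing=True, rng=random.Random(0))
scattered = arrange_shelf(products, instancing=False, rng=random.Random(0))
```

With `instancing=True`, every category occupies one contiguous run of slots; with `instancing=False`, the order is a plain random permutation.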

As with the traditional dropout component in neural networks, where one deactivates nodes with some probability, we’ve created the equivalent for synthetic data, whereby one can remove objects from the scene with some predefined probability. This leads to more variation in dense scenes and furthers the structural realism, as most real supermarket shelves are not full and, oftentimes, quite messy.
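As a rough sketch of the idea, object dropout amounts to an independent coin flip per object. The function name `drop_objects`, the placeholder object names and the probability used here are assumptions made for illustration, not values from our engine.

```python
import random


def drop_objects(objects, p_drop, rng):
    """Remove each object independently with probability p_drop."""
    if not 0.0 <= p_drop <= 1.0:
        raise ValueError("p_drop must be between 0 and 1")
    # rng.random() is uniform in [0, 1), so an object survives
    # with probability 1 - p_drop.
    return [obj for obj in objects if rng.random() >= p_drop]


# A hypothetical fully stocked shelf of 20 placeholder objects.
shelf = [f"product_{i}" for i in range(20)]
kept = drop_objects(shelf, p_drop=0.2, rng=random.Random(42))
```

Passing a seeded `random.Random` keeps a generated scene reproducible, which is convenient when the same layout has to be rendered again with different lighting or camera settings.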

Finally, physical simulation of objects interacting on a shelf is another interesting approach that yields good results, but we have not included it in CPGDet-129. There is an inherent difficulty in making sure that objects interact according to the laws of physics, especially for soft bodies, as well as in 3D modelling these assets accordingly.

Kubric, recently released and presented at CVPR 2022, is an open source generation engine from Google Research that makes this possible for rigid bodies by integrating with the simulation engine PyBullet.

Visual appearance and post-processing effects

In terms of visual appearance, we’ve used Unity’s High Definition Render Pipeline (HDRP), with light probes baked into the scene. We vary the light intensity and the camera location. In one scenario, we let the camera roam free, whereas in another, only the camera depth is varied. This is one of the most important components, as mimicking the camera location of the real scene and perturbing it only slightly yields better results.
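The two camera scenarios can be illustrated with a small sampling function. This is only a sketch under stated assumptions: the name `sample_camera_position`, the mode strings, the choice of `z` as the depth axis and the `max_offset` value are all hypothetical, and the free-roaming mode is simplified here to a bounded offset on every axis.

```python
import random


def sample_camera_position(reference, mode, rng, max_offset=0.1):
    """Perturb a reference camera position (x, y, z).

    Assumes z is the depth axis pointing towards the shelf.
    """
    x, y, z = reference
    if mode == "depth_only":
        # Only the distance to the shelf changes.
        return (x, y, z + rng.uniform(-max_offset, max_offset))
    if mode == "free":
        # Every coordinate is perturbed independently.
        return tuple(c + rng.uniform(-max_offset, max_offset) for c in (x, y, z))
    raise ValueError(f"unknown mode: {mode}")


rng = random.Random(7)
reference = (0.0, 1.5, -2.0)  # made-up position mimicking the real camera
depth_sample = sample_camera_position(reference, "depth_only", rng)
free_sample = sample_camera_position(reference, "free", rng)
```

Keeping `max_offset` small corresponds to perturbing the camera only slightly around the location used in the real scene.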

Some of the most important post-processing effects were bloom, colour adjustment and lens distortion.

Data-Centric Validation

Ultimately, the models trained on synthetic data and the domain adaptation techniques used to bridge the syn2real gap are the two components that prove or disprove the success of the synthetic data. However, as part of the data-centric AI movement, we’ve noticed that it is equally important to validate the synthetic data not only empirically, using the model as a proxy, but also independently of the task.

Firstly, there needs to be consistency between human-labelled real data annotations and synthetic data annotations. Because this depends on who labels the data, we’ve released a script together with the dataset that filters out annotations based on their visibility or pixel area. In CPGDet-129, the number of occlusions and annotations per image is very high before filtering by visibility and area.
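To make the filtering step concrete, here is a minimal sketch of what such a filter could look like. It is not the released script: the annotation format (dictionaries with `area` in pixels and `visibility` as a fraction between 0 and 1), the function name `filter_annotations` and both thresholds are assumptions made for this example.

```python
def filter_annotations(annotations, min_visibility=0.3, min_area=400.0):
    """Keep annotations that are both visible enough and large enough."""
    kept = []
    for ann in annotations:
        visible_enough = ann["visibility"] >= min_visibility
        large_enough = ann["area"] >= min_area
        if visible_enough and large_enough:
            kept.append(ann)
    return kept


# Made-up annotations, for illustration only.
annotations = [
    {"id": 1, "area": 5200.0, "visibility": 0.95},
    {"id": 2, "area": 4800.0, "visibility": 0.10},  # mostly occluded
    {"id": 3, "area": 150.0, "visibility": 1.00},   # too small
]
kept_ids = [ann["id"] for ann in filter_annotations(annotations)]
```

In practice, the thresholds would be tuned so that the filtered synthetic annotations match what a human annotator would actually label, for example by skipping products that are almost entirely hidden behind others.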

One simple validation tool for testing the consistency of annotations across synthetic and real data is to compare the distribution of the real ground truth annotations with that of the synthetic annotations after filtering. One can see from the plot below that the synthetic distribution encompasses the real one. Unfortunately, at this time, we are not able to publicly release the real data.
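One way to run this kind of check is to compare the number of annotations per image in both datasets. The sketch below assumes that this is the statistic of interest and that annotations carry an `image_id` field; the function names and the counts are made up for illustration, and a range check is only a coarse stand-in for comparing full histograms.

```python
from collections import Counter


def annotations_per_image(annotations):
    """Return the sorted list of annotation counts, one entry per image."""
    counts = Counter(ann["image_id"] for ann in annotations)
    return sorted(counts.values())


def encompasses(synthetic_counts, real_counts):
    """True if the synthetic range of counts covers the real range."""
    return (min(synthetic_counts) <= min(real_counts)
            and max(real_counts) <= max(synthetic_counts))


# Toy annotations: image "a" has two boxes, image "b" has one.
toy = [{"image_id": "a"}, {"image_id": "a"}, {"image_id": "b"}]
toy_counts = annotations_per_image(toy)

# Made-up per-image counts standing in for the two datasets.
synthetic_counts = [5, 12, 20, 31]
real_counts = [8, 14, 25]
covered = encompasses(synthetic_counts, real_counts)
```

The same comparison can be repeated for other annotation statistics, such as box area or aspect ratio.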

To further support the annotation consistency hypothesis, we’ve observed in our experiments that:

- AP75 is very sensitive to the distribution shift between synthetic annotations and ground truth annotations. This shift often appears because of inconsistency between human annotations and rendered annotations

- Synthetic dataset size is important for predicted bounding box accuracy
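The sensitivity of AP75 follows from its definition: a detection only counts as correct when its intersection over union (IoU) with the ground truth box is at least 0.75. The sketch below uses made-up boxes in `(x1, y1, x2, y2)` format and assumes, for illustration, that a human labels only the visible part of a product while the renderer labels its full extent.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


human_box = (0, 0, 100, 100)     # visible part only (made-up values)
rendered_box = (0, 0, 100, 140)  # full extent, including the occluded part
overlap = iou(human_box, rendered_box)  # 10000 / 14000, roughly 0.714
```

A model that perfectly reproduces the rendered-style box would still pass the 0.5 IoU threshold against the human label here, but fail the 0.75 threshold, so a systematic difference in labelling style affects AP75 far more than AP50.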

Conclusion

As mentioned in a previous post on real vs. syn data, CPGDet-129 was used to achieve 60% mAP on the real test set and increase the robustness of the model, all without any real data used for training.

With the public release of CPGDet-129, we hope to get feedback from others and learn from the community about what challenges arise from using synthetic data and how we can mitigate them.

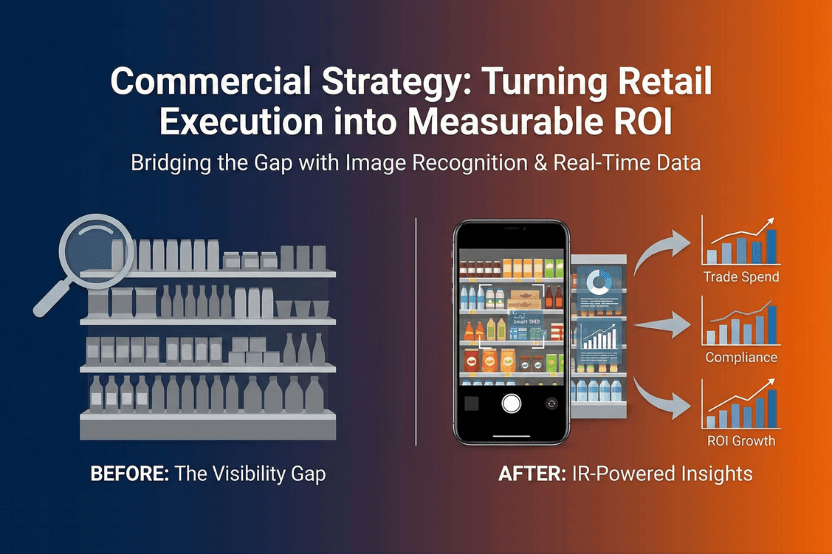
